Making aerial fiber deployment faster and more e...

Learn more about how and what Facebook builds across its engineering efforts, including highlights from our recent work in open source, infrastructure engineering, AI, AR/VR, and video, as well as talks from events like F8 and @Scale.

Connectivity and Data Centers

Making aerial fiber deployment faster and more e...

Inside Facebook Data Centers

Building Fiber Backhaul Connectivity in Uganda

Terragraph Engineering

Video Engineering

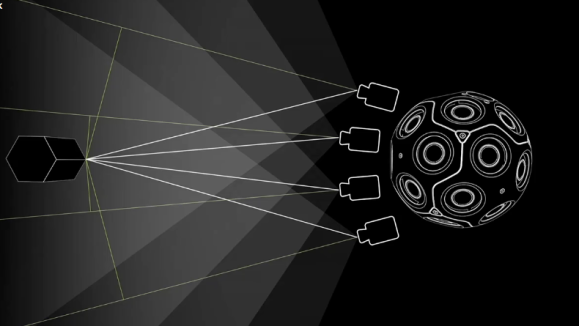

Surround 360: Beyond Stereo 360 Cameras

GroupLove: Welcome to Your Life - shot on Surrou...

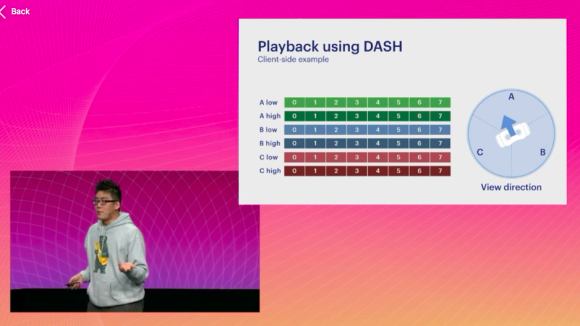

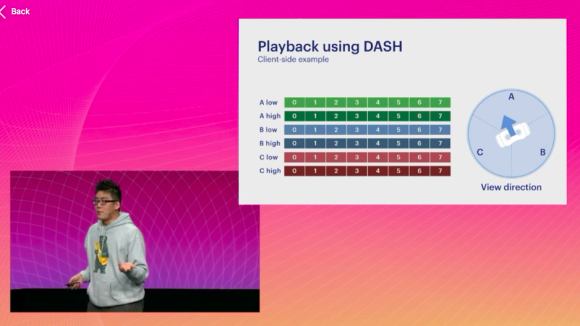

Optimizing 360 Video for Oculus

Encoding for 360 Video and VR

Technology Demonstrations

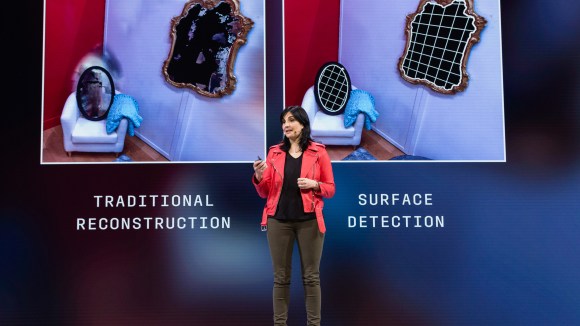

DensePose: Creating a 3D surface on top of movin...

Realistic 3D Model of Physical Spaces

Demonstrating photorealistic 3D avatars

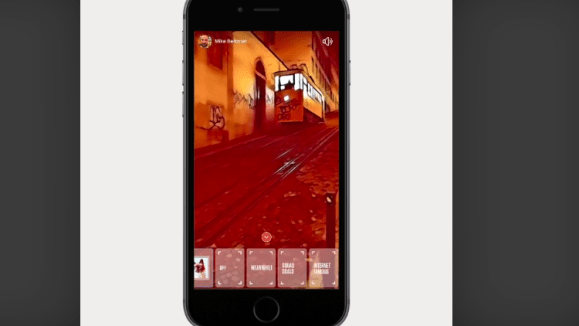

SLAM - Heather Day AR Art

Video Style Transfer Demo

Artificial Intelligence

Speeding up AI development and collaboration wit...

F8 2018 Day 2 Keynote Speakers

Computer Vision Research at Facebook

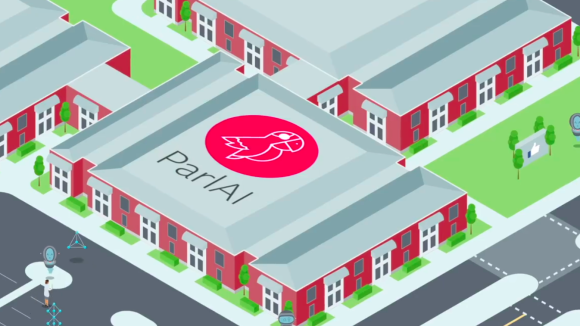

ParlAI

A Look at Facebook AI Research (FAIR) Paris

Teaching machines to see and understand

Virtual and Augmented Reality

F8 2018 Highlights

F8 2018 Virtual Reality

F8 2018 Day 1 Keynote

SLAM - Heather Day AR Art

Optimizing 360 Video for Oculus

Solving the thundering herd problem

Infrastructure Systems

Performance @Scale 2018 - Opening Remarks

Inside Facebook Data Centers

Terragraph Engineering

F8 Keynotes and Speeches

F8 2018 Highlights

F8 2018 Virtual Reality

F8 2018 Day 2 Keynote Speakers

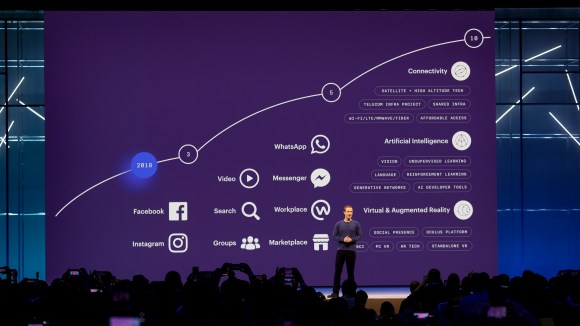

F8 2018 Day 1 Keynote

F8 Keynote - Jay Parikh

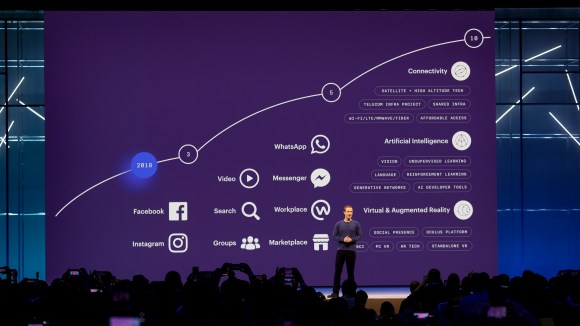

F8 2016 Keynote

Facebook Engineering

ParlAI

Disaggregate: Networking - Experiences Deploying...

F8 2016 keynote - Yaser Sheikh