When it comes to optimizing data centers for energy usage, even the smallest changes can have a significant impact. Facebook’s growth over the years has greatly expanded our data center footprint, and we’ve learned many lessons and applied some of the industry’s best practices to make our data centers much more efficient, which saves us money and uses less energy. At the Silicon Valley Leadership Group’s Data Center Efficiency Summit last week, we shared these lessons and the new strategies we’ve implemented with the data center community at large so that others can use these techniques too, multiplying the energy savings and environmental benefits across the infrastructure of many other companies.

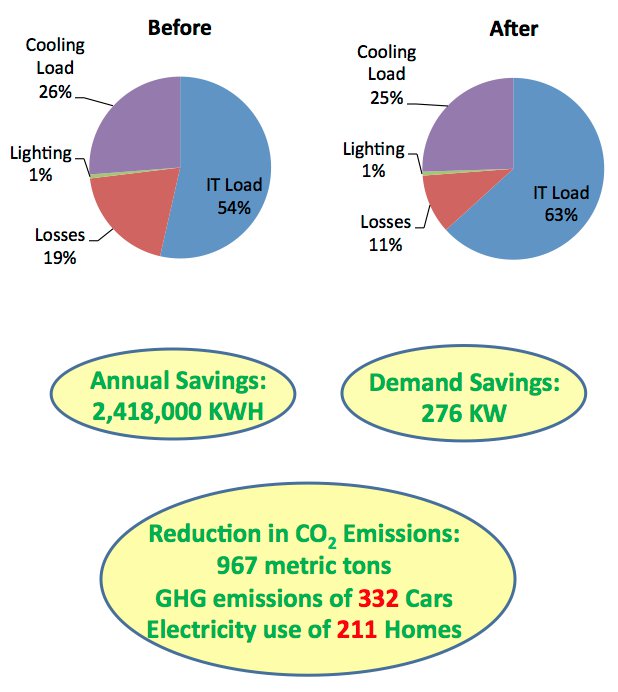

With our new strategies in place, we’re saving almost 2.5 million kilowatt-hours annually within one of our data centers and reducing our energy demand by 276 kilowatts. In economic terms, this translates to annual cost savings of almost $230,000. Environmentally, we’ve reduced the amount of greenhouse gases produced by 967 metric tons annually, which is equivalent to the emissions of 332 cars or the electricity usage of 211 homes, using EPA calculations.
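As a rough sanity check, the figures above can be related to one another with simple arithmetic. The sketch below assumes the 276 kilowatt reduction applies continuously for a full year; the implied electricity rate and emissions factor are derived values, not numbers stated in this post.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_kwh(demand_kw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy consumed by a constant load of demand_kw over the given hours."""
    return demand_kw * hours


# Figures quoted in the post
demand_reduction_kw = 276
annual_savings_usd = 230_000   # "almost $230,000"
ghg_reduction_tonnes = 967     # metric tons per year

# Derived values (assumption: the reduction holds around the clock)
energy_kwh = annual_energy_kwh(demand_reduction_kw)
implied_usd_per_kwh = annual_savings_usd / energy_kwh
implied_kg_ghg_per_kwh = ghg_reduction_tonnes * 1000 / energy_kwh

print(f"Annual energy saved: {energy_kwh:,.0f} kWh")
print(f"Implied electricity rate: ${implied_usd_per_kwh:.3f} per kWh")
print(f"Implied emissions factor: {implied_kg_ghg_per_kwh:.2f} kg per kWh")
```

Under that assumption the energy works out to roughly 2.4 million kilowatt-hours, in line with the "almost 2.5 million" figure quoted above.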

We’re realizing these savings by addressing the following issues:

- Inefficient airflow distribution

- Excessive cooling

- Low rack inlet and chiller water temperatures

Improving Airflow Distribution

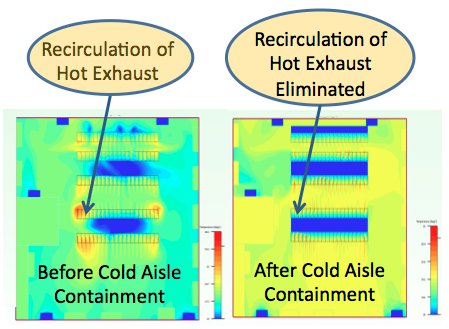

We conducted our own internal computational fluid dynamics analysis, which led us to make a number of improvements to airflow distribution, most notably rolling out cold aisle containment throughout the facility. By enclosing the cold aisles within each server room, we can direct the airflow and control temperatures in the data center more efficiently, because containment eliminates both the bypass of cold air and the infiltration of hot exhaust into the cold aisles. Other mechanical improvements include adding skirting around the power distribution units, installing blanking plates, and sealing cable cut-outs to minimize air leaks.

Reducing Cooling Levels

Believe it or not, there is such a thing as too much cooling when it comes to data centers. We took a two-pronged approach to overcoming this issue. First, we discovered that the server fans were spinning faster than necessary, so we worked with the server manufacturers to optimize their fan speed control algorithm while keeping temperatures within the recommended range. For each server, this saves up to 3 watts and reduces the airflow required by up to 8 cubic feet per minute, which quickly adds up in a 56,000 square foot facility.
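To see how the per-server numbers add up, here is a minimal sketch that scales them to a fleet. The server count is purely hypothetical (this post does not state one), and because the per-server figures are "up to" values, the results are upper bounds.

```python
WATTS_PER_SERVER = 3  # upper bound from the post ("up to 3 watts")
CFM_PER_SERVER = 8    # upper bound from the post ("up to 8 cubic feet per minute")


def fleet_savings(server_count: int) -> tuple[float, int]:
    """Return (max kW saved, max CFM of airflow no longer required)."""
    kw_saved = server_count * WATTS_PER_SERVER / 1000
    cfm_saved = server_count * CFM_PER_SERVER
    return kw_saved, cfm_saved


# Hypothetical fleet size, chosen only for illustration
hypothetical_servers = 10_000
kw_saved, cfm_saved = fleet_savings(hypothetical_servers)

print(f"Up to {kw_saved:.0f} kW and {cfm_saved:,} CFM across {hypothetical_servers:,} servers")
```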

Second, by analyzing airflows in each server room, we discovered that we didn’t need to use as many CRAH (computer room air handler) units as were available. So we shut down 15 CRAH units, reducing the installed demand by 114 kilowatts.
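The CRAH numbers can be broken down the same way. This sketch derives the average demand per unit and an annual energy figure; the annual value assumes the units would otherwise have run continuously all year, which this post does not state.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

# Figures quoted in the post
crah_units_shut_down = 15
crah_demand_reduction_kw = 114

# Average demand attributable to each unit that was shut down
avg_kw_per_unit = crah_demand_reduction_kw / crah_units_shut_down

# Assumption: the units would otherwise have run around the clock
crah_annual_kwh = crah_demand_reduction_kw * HOURS_PER_YEAR

print(f"Average demand per CRAH unit: {avg_kw_per_unit:.1f} kW")
print(f"Annual energy if run continuously: {crah_annual_kwh:,} kWh")
```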

Raising Rack Inlet and Chiller Water Temperatures

We are also reducing our cooling levels by raising the set point temperature of the CRAH units while maintaining near-uniform temperatures in the cold aisles. It’s a delicate balance; we can raise the temperature only so much before we need to use more energy by increasing the speed of the server fans. In the end, we raised the temperature for each CRAH unit’s return air from 72 to 81 degrees Fahrenheit.

Finally, we raised the chilled water temperature from 44 to 52 degrees Fahrenheit, reducing our chilled water system load by 171 tons per hour.
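For readers who work in metric units, the sketch below converts the temperatures and the chilled water load quoted above. It assumes the "171 tons" figure refers to tons of refrigeration, a unit of cooling power equal to 12,000 BTU per hour (about 3.517 kilowatts of heat removal); that interpretation is an assumption, not something this post spells out.

```python
KW_PER_TON_OF_REFRIGERATION = 3.51685  # 12,000 BTU/h expressed in kilowatts


def fahrenheit_to_celsius(deg_f: float) -> float:
    """Convert an absolute temperature from Fahrenheit to Celsius."""
    return (deg_f - 32) * 5 / 9


def tons_to_kw(tons: float) -> float:
    """Convert tons of refrigeration to kilowatts of heat removal."""
    return tons * KW_PER_TON_OF_REFRIGERATION


# Set points quoted in the post, in degrees Fahrenheit
set_points_f = {
    "CRAH return air (before)": 72,
    "CRAH return air (after)": 81,
    "Chilled water (before)": 44,
    "Chilled water (after)": 52,
}

for label, deg_f in set_points_f.items():
    print(f"{label}: {deg_f} F = {fahrenheit_to_celsius(deg_f):.1f} C")

# Assumption: 171 tons is a cooling load in tons of refrigeration
load_reduction_kw = tons_to_kw(171)
print(f"Chilled water load reduction: about {load_reduction_kw:.0f} kW of cooling")
```

Note that this load is thermal (heat removed), so it is not directly comparable to the electrical demand figures elsewhere in this post.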

We’ve adopted some of these measures in our other data centers as well.

Improving data center efficiency is a moving target. We’re always exploring new ways in which we can improve the efficiency of our data centers, and we’ll continue to share our findings with the industry.

Dan Lee, P.E., and Veerendra Mulay, Ph.D., also contributed to this research.