Language translation is one of the ways we can give people the power to build community and bring the world closer together. It can help people connect with family members who live overseas, or better understand the perspective of someone who speaks a different language. We use machine translation to translate text in posts and comments automatically, in order to break language barriers and allow people around the world to communicate with each other.

Creating seamless, highly accurate translation experiences for the 2 billion people who use Facebook is difficult. We need to account for context, slang, typos, abbreviations, and intent simultaneously. To continue improving the quality of our translations, we recently switched from using phrase-based machine translation models to neural networks to power all of our backend translation systems, which account for more than 2,000 translation directions and 4.5 billion translations each day. These new models provide more accurate and fluent translations, improving people’s experience consuming Facebook content that is not written in their preferred language.

Sequence-to-sequence LSTM with attention: Using context

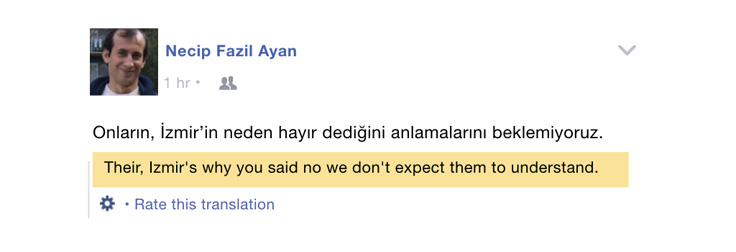

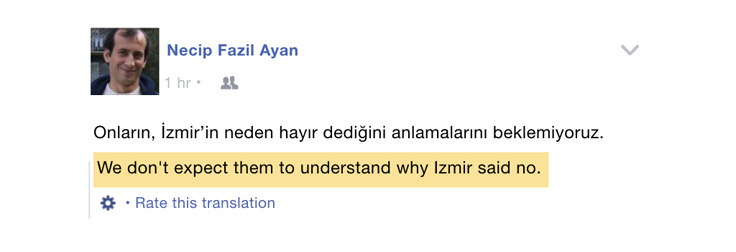

Our previous phrase-based statistical techniques were useful, but they also had limitations. One of the main drawbacks of phrase-based systems is that they break down sentences into individual words or phrases, and thus when producing translations they can consider only several words at a time. This leads to difficulty translating between languages with markedly different word orderings. To remedy this and build our neural network systems, we started with a type of recurrent neural network known as sequence-to-sequence LSTM (long short-term memory) with attention. Such a network can take into account the entire context of the source sentence and everything generated so far, to create more accurate and fluent translations. This allows for long-distance reordering, as encountered between English and Turkish, for example. Take the following translation produced by a phrase-based Turkish-to-English system:

Compare that with the translation produced by our new, neural net-based Turkish-to-English system:

With the new system, we saw an average relative increase of 11 percent in BLEU — a widely used metric for judging the accuracy of machine translation — across all languages compared with the phrase-based systems.

Handling unknown words

In many cases, a word in the source sentence doesn’t have a direct corresponding translation in the target vocabulary. When that happens, a neural system will generate a placeholder for the unknown word. In this case, we take advantage of the soft alignment that the attention mechanism produces between source and target words in order to pass the original source word through to the target sentence. Then we look up the translation of that word in a bilingual lexicon built from our training data and replace the unknown word in the target sentence. This method is more robust than using a traditional dictionary, especially for noisy input. For example, in English-to-Spanish translation, we are able to translate “tmrw” (tomorrow) into “mañana.” Though the addition of a lexicon brings only marginal improvements in BLEU score, it leads to higher translation ratings by people on Facebook.

Vocabulary reduction

A typical neural machine translation model calculates a probability distribution over all the words in the target vocabulary. The more words we include in this distribution, the more time the calculation takes. We use a modeling technique called vocabulary reduction to remedy this issue at both training and inference time. With vocabulary reduction, we combine the most frequently occurring words in the target vocabulary and a set of possible translation candidates for individual words of a given sentence to reduce the size of the target vocabulary. Filtering the target vocabulary reduces the size of the output projection layer, which helps make computation much faster without degrading quality too significantly.

Tuning model parameters

Neural networks almost always have tunable parameters that control things like the learning rate of the model. Picking the optimal set of these hyperparameters can be extremely beneficial to performance. However, this presents a significant challenge for machine translation at scale, since each translation direction is represented by a unique model with its own set of hyperparameters. Since the optimal values may be different for each model, we had to tune them for for each system in production separately. We ran thousands of end-to-end translation experiments over several months, leveraging the FBLearner Flow platform to fine-tune hyperparameters such as learning rate, attention type, and ensemble size. This had a major impact for some systems. For example, we saw a relative improvement of 3.7 percent BLEU for English to Spanish, based only on tuning model hyperparameters.

Scaling neural machine translation with Caffe2

One of the challenges with transitioning to a neural system was getting the models to run at the speed and efficiency necessary for Facebook scale. We implemented our translation systems in the deep learning framework Caffe2. Its down-to-the-metal and flexible nature allowed us to tune the performance of our translation models during both training and inference on our GPU and CPU platforms.

For training, we implemented memory optimizations such as blob recycling and blob recomputation, which helped us to train larger batches and complete training faster. For inference, we used specialized vector math libraries and weight quantization to improve computational efficiency. Early benchmarks on existing models indicated that the computational resources to support more than 2,000 translation directions would be prohibitively high. However, the flexible nature of Caffe2 and the optimizations we implemented gave us a 2.5x boost in efficiency, which allowed us to deploy neural machine translation models into production.

We follow the practice, common in machine translation, of using beam search at decoding time to improve our estimate of the highest-likelihood output sentence according to the model. We exploited the generality of the recurrent neural network (RNN) abstraction in Caffe2 to implement beam search directly as a single forward network computation, which gives us fast and efficient inference.

Over the course of this work, we developed RNN building blocks such as LSTM, multiplicative integration LSTM, and attention. We’re excited to share this technology as part of Caffe2 and to offer our learnings to the research and open source communities.

Ongoing work

The Facebook Artificial Intelligence Research (FAIR) team recently published research on using convolutional neural networks (CNNs) for machine translation. We worked closely with FAIR to bring this technology from research to production systems for the first time, which took less than three months. We launched CNN models for English-to-French and English-to-German translations, which brought BLEU quality improvements of 12.0 percent (+4.3) and 14.4 percent (+3.4), respectively, over the previous systems. These quality improvements make CNNs an exciting new development path, and we will continue our work to utilize CNNs for more translation systems.

We have just started being able to use more context for translations. Neural networks open up many future development paths related to adding further context, such as a photo accompanying the text of a post, to create better translations.

We are also starting to explore multilingual models that can translate many different language directions. This will help solve the challenge of fine-tuning each system relating to a specific language pair, and may also bring quality gains from some directions through the sharing of training data.

Completing the transition from phrase-based to neural machine translation is a milestone on our path to providing Facebook experiences to everyone in their preferred language. We will continue to push the boundaries of neural machine translation technology, with the aim of providing humanlike translations to everyone on Facebook.