Language translation is important to Facebook’s mission of making the world more open and connected, enabling everyone to consume posts or videos in their preferred language — all at the highest possible accuracy and speed.

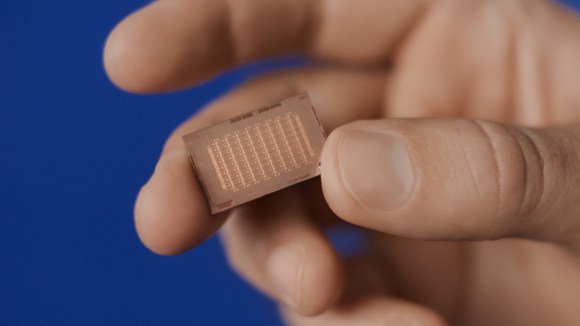

Today, the Facebook Artificial Intelligence Research (FAIR) team published research results using a novel convolutional neural network (CNN) approach for language translation that achieves state-of-the-art accuracy at nine times the speed of recurrent neural systems [1]. Additionally, the FAIR sequence modeling toolkit (fairseq) source code and the trained systems are available under an open source license on GitHub so that other researchers can build custom models for translation, text summarization, and other tasks.

Why convolutional neural networks?

Originally developed by Yann LeCun decades ago, CNNs have been very successful in several machine learning fields, such as image processing. However, recurrent neural networks (RNNs) are the incumbent technology for text applications and have been the top choice for language translation because of their high accuracy.

Though RNNs have historically outperformed CNNs at language translation tasks, their design has an inherent limitation, which can be understood by looking at how they process information. Computers translate text by reading a sentence in one language and predicting a sequence of words in another language with the same meaning. RNNs operate in a strict left-to-right or right-to-left order, one word at a time. This is a less natural fit to the highly parallel GPU hardware that powers modern machine learning. The computation cannot be fully parallelized, because each word must wait until the network is done with the previous word. In comparison, CNNs can compute all elements simultaneously, taking full advantage of GPU parallelism. They therefore are computationally more efficient. Another advantage of CNNs is that information is processed hierarchically, which makes it easier to capture complex relationships in the data.
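To make the contrast concrete, here is a minimal sketch in plain Python. It is a toy illustration, not the FAIR model: the function names, scalar weights, and kernel values are all assumptions chosen for readability. The recurrent update must run in order because each state needs the previous one, while each convolution output reads only a fixed window of inputs and can be computed in any order (and therefore in parallel on a GPU).

```python
import math


def rnn_states(inputs, w_in=0.5, w_rec=0.9):
    """Toy scalar recurrence: h_t depends on h_(t-1), so steps must run in order."""
    h = 0.0
    states = []
    for x in inputs:
        # The new state cannot be computed until the previous state is known.
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


def conv_outputs(inputs, kernel=(0.25, 0.5, 0.25), order=None):
    """Toy 1D convolution: each output depends only on a local input window."""
    pad = len(kernel) // 2
    padded = [0.0] * pad + list(inputs) + [0.0] * pad
    positions = list(order) if order is not None else range(len(inputs))
    outputs = [None] * len(inputs)
    for i in positions:
        # No output depends on another output, so the visiting order is free.
        outputs[i] = sum(k * padded[i + j] for j, k in enumerate(kernel))
    return outputs


if __name__ == "__main__":
    sentence = [1.0, 2.0, 3.0]  # stand-ins for word representations
    print(rnn_states(sentence))
    print(conv_outputs(sentence))
    print(conv_outputs(sentence, order=[2, 0, 1]))  # same values, different order
```

Stacking several such convolutions lets higher layers see progressively wider spans of the sentence, which is one way to read the hierarchical processing mentioned above.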

In previous research, CNNs applied to translation have not outperformed RNNs. Nevertheless, because of the architectural potential of CNNs, FAIR began research that has led to a CNN-based translation model with strong performance. The greater computational efficiency of CNNs has the potential to scale translation and cover more of the world’s 6,500 languages.

State-of-the-art results at record speed

Our results demonstrate a new state of the art compared with RNNs [2] on widely used public benchmark data sets provided by the Conference on Machine Translation (WMT). When the CNN and the best RNN of similar size are trained in the same way, the CNN outperforms the RNN by 1.5 BLEU, a widely used metric for judging the accuracy of machine translation, on the WMT 2014 English-French task. On WMT 2014 English-German, the improvement is 0.5 BLEU, and on WMT 2016 English-Romanian, we improve by 1.8 BLEU.

One consideration with neural machine translation for practical applications is how long it takes to get a translation once we show the system a sentence. The FAIR CNN model is computationally very efficient and is nine times faster than strong RNN systems. Much research has focused on speeding up neural networks through quantizing weights or distillation, to name a few methods, and those can be equally applied to the CNN model to increase speed even more, suggesting significant future potential.

Better translation with multi-hop attention and gating

A distinguishing component of our architecture is multi-hop attention. An attention mechanism is similar to the way a person would break down a sentence when translating it: Instead of looking at the sentence only once and then writing down the full translation without looking back, the network takes repeated “glimpses” at the sentence to choose which words it will translate next, much like a human occasionally looks back at specific keywords when writing down a translation [3]. Multi-hop attention is an enhanced version of this mechanism, which allows the network to make multiple such glimpses to produce better translations. These glimpses also depend on each other. For example, the first glimpse could focus on a verb and the second glimpse on the associated auxiliary verb.
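The idea of dependent glimpses can be sketched in a few lines of plain Python. This is a simplified illustration under stated assumptions, not the formulation from [1]: the dot-product scoring, the way the next query is formed by adding the retrieved context, and the names `attend` and `multi_hop` are all hypothetical choices made for clarity.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, source_vectors):
    """One glimpse: weight every source word by its similarity to the query."""
    scores = [sum(q * s for q, s in zip(query, vec)) for vec in source_vectors]
    weights = softmax(scores)
    context = [
        sum(w * vec[d] for w, vec in zip(weights, source_vectors))
        for d in range(len(query))
    ]
    return weights, context


def multi_hop(query, source_vectors, hops=2):
    """Several glimpses in a row; each hop's query includes the previous context."""
    all_weights = []
    for _ in range(hops):
        weights, context = attend(query, source_vectors)
        all_weights.append(weights)
        # Assumption: the next query is the old query plus what was just retrieved.
        query = [q + c for q, c in zip(query, context)]
    return all_weights, query


if __name__ == "__main__":
    source = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # one toy vector per source word
    hops, final_query = multi_hop([2.0, 0.0], source)
    for number, weights in enumerate(hops, start=1):
        print(f"hop {number}:", [round(w, 3) for w in weights])
```

Because the second hop's query already contains the first hop's context, the two sets of weights can differ, which is the sense in which the glimpses depend on each other.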

The figure below shows the system reading a French phrase (encoding) and then outputting an English translation (decoding). We first run the encoder, a CNN, to create a vector for each French word; these vectors are all computed simultaneously. Next, the decoder CNN produces English words, one at a time. At every step, the attention mechanism glimpses at the French sentence to decide which words are most relevant for predicting the next English word in the translation. The decoder has two layers, and the animation illustrates how attention is applied once for each layer. The strength of the green lines indicates how much the network focuses on each French word. When the network is being trained, the reference translation is always available, so the computation for the English words can also be done simultaneously.
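The difference between training and translation time can be illustrated with a toy causal convolution, in which each output position sees only the current and earlier decoder inputs. This is a sketch under assumptions: the kernel values and the function names `causal_conv` and `decode_step_by_step` are hypothetical, and a real decoder works on vectors and predicts words rather than returning numbers.

```python
def causal_conv(inputs, kernel=(0.5, 0.3, 0.2)):
    """Each output sees only the current and earlier positions.

    kernel[0] weights the current position, kernel[1] the previous one, and so on.
    """
    outputs = []
    for i in range(len(inputs)):
        total = 0.0
        for j, k in enumerate(kernel):
            if i - j >= 0:  # never look at positions to the right
                total += k * inputs[i - j]
        outputs.append(total)
    return outputs


def decode_step_by_step(inputs, kernel=(0.5, 0.3, 0.2)):
    """Translation time: the prefix grows by one element per step."""
    prefix = []
    outputs = []
    for x in inputs:
        prefix.append(x)
        # Only the newest position is needed at each step.
        outputs.append(causal_conv(prefix, kernel)[-1])
    return outputs


if __name__ == "__main__":
    target = [1.0, 2.0, 3.0]  # stand-ins for English word representations
    print(causal_conv(target))          # training: whole sequence at once
    print(decode_step_by_step(target))  # translation: one position per step
```

Both calls yield the same values. During training the full target sequence is known, so every position can be processed in one pass, whereas at translation time each position has to wait for the preceding words to be produced.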

Another aspect of our system is gating, which controls the information flow in the neural network. In every neural network, information flows through so-called hidden units. Our gating mechanism controls exactly which information should be passed on to the next unit so that a good translation can be produced. For example, when predicting the next word, the network takes into account the translation it has produced so far. Gating allows it to zoom in on a particular aspect of the translation or to get a broader picture — all depending on what the network deems appropriate in the current context.
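The paper [1] builds its gating on gated linear units, in which one half of a layer's output decides how much of the other half passes through. The following is a minimal sketch of that idea in plain Python; the function name and the list-based interface are assumptions for illustration, not the fairseq API.

```python
import math


def sigmoid(x):
    """Squash a number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_linear_unit(values, gates):
    """Scale each value by a gate between 0 (block) and 1 (pass through)."""
    if len(values) != len(gates):
        raise ValueError("values and gates must have the same length")
    return [v * sigmoid(g) for v, g in zip(values, gates)]


if __name__ == "__main__":
    candidate = [2.0, 2.0, 2.0]  # information a layer could pass on
    gates = [20.0, 0.0, -20.0]   # gate inputs computed by the same layer
    # The first value passes almost unchanged, the second is halved,
    # and the third is almost entirely blocked.
    print(gated_linear_unit(candidate, gates))
```

In the network, both the candidate values and the gate inputs are learned, which is how the model decides for itself what to pass on in a given context.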

Future developments

This approach is an alternative architecture for machine translation that opens up new possibilities for other text processing tasks. For example, multi-hop attention in dialogue systems allows neural networks to focus on distinct parts of the conversation, such as two separate facts, and to tie them together in order to better respond to complex questions.

References

[1] Convolutional Sequence to Sequence Learning. Jonas Gehring, Michael Auli, David Grangier, Denis Yarats, Yann N. Dauphin. arXiv, 2017.

[2] Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation. Yonghui Wu, Mike Schuster, Zhifeng Chen, Quoc V. Le, Mohammad Norouzi, Wolfgang Macherey, Maxim Krikun, Yuan Cao, Qin Gao, Klaus Macherey, Jeff Klingner, Apurva Shah, Melvin Johnson, Xiaobing Liu, Łukasz Kaiser, Stephan Gouws, Yoshikiyo Kato, Taku Kudo, Hideto Kazawa, Keith Stevens, George Kurian, Nishant Patil, Wei Wang, Cliff Young, Jason Smith, Jason Riesa, Alex Rudnick, Oriol Vinyals, Greg Corrado, Macduff Hughes, Jeffrey Dean. Technical Report, 2016.

[3] Neural Machine Translation by Jointly Learning to Align and Translate. Dzmitry Bahdanau, Kyunghyun Cho, Yoshua Bengio. International Conference on Learning Representations, 2015.