Thrift v. 1

At Facebook, we place a lot of emphasis on choosing the best tools and implementations for our backend services, regardless of programming language. We use various programming languages on a case-by-case basis to optimize for the right combination of performance, ease and speed of development, availability of existing libraries, and so on. To support this practice, in 2006 we created Thrift, a cross-language framework for handling RPC, including serialization/deserialization, protocol transport, and server creation. Since then, usage of Thrift at Facebook has continued to grow. Today, it powers more than 100 services used in production, most of which are written in C++, Java, PHP, or Python.

After using Thrift for a year internally, we open-sourced it in 2007. Since then, as we continued to gain experience running Thrift infrastructure inside Facebook, we found two things:

1) Thrift was missing a core set of features

2) We could do a lot more for performance

For example, internal service owners kept re-implementing the same features, such as transport compression, authentication, and counters to track the health of their servers. Engineers were also spending a lot of time trying to eke out more performance from their services. Outside of Facebook, Thrift gained wide use as a serialization and RPC framework, but ran into similar performance concerns, as well as issues separating the serialization and transport logic.

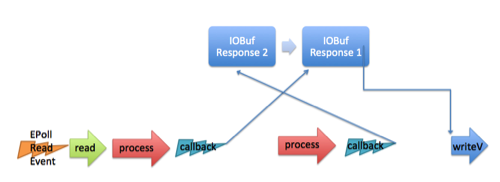

Over time, we found that two changes solved many of the performance issues: processing requests from the same client in parallel, and sending responses out of order. The benefits of the former are obvious; the latter helps avoid application-level head-of-line blocking, where one slow request delays every response queued behind it. But we still had to address the need for more features.
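To make the head-of-line point concrete, here is a minimal sketch in Python using `asyncio`. It is not fbthrift code: the `handle` function, the delays, and the use of a sequence ID to match responses to requests are assumptions made for illustration. Three requests from one client are processed concurrently, and each response is emitted as soon as it is ready rather than in request order.

```python
import asyncio
from typing import List, Tuple


async def handle(seq_id: int, delay: float) -> Tuple[int, str]:
    """Hypothetical handler: simulate work that takes `delay` seconds."""
    await asyncio.sleep(delay)
    return seq_id, f"result-{seq_id}"


async def serve_out_of_order(requests: List[Tuple[int, float]]) -> List[Tuple[int, str]]:
    """Run all requests concurrently and collect responses in completion order."""
    tasks = [asyncio.create_task(handle(seq_id, delay)) for seq_id, delay in requests]
    responses = []
    for finished in asyncio.as_completed(tasks):
        # Each response is taken as soon as it is ready, regardless of request order.
        responses.append(await finished)
    return responses


def run_demo() -> List[Tuple[int, str]]:
    # Request 1 is slow; requests 2 and 3 are fast and should not wait behind it.
    requests = [(1, 0.3), (2, 0.01), (3, 0.1)]
    return asyncio.run(serve_out_of_order(requests))


if __name__ == "__main__":
    for seq_id, result in run_demo():
        print(seq_id, result)
```

Because every response carries its sequence ID, the client can pair it with the original request even though the slow request finishes last.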

Evolving the architecture

When Thrift was originally conceived, most services were relatively straightforward in design. A web server would make a Thrift request to some backend service, and the service would respond. But as Facebook grew, so did the complexity of the services. Making a Thrift request was no longer so simple. Not only did we have tiers of services (services calling other services), but we also started seeing unique feature demands for each service, such as the various compression or trace/debug needs. Over time it became obvious that Thrift was in need of an upgrade for some of our specific use cases. In particular, we sought to improve performance for asynchronous workloads, and we wanted a better way to support per-request features.

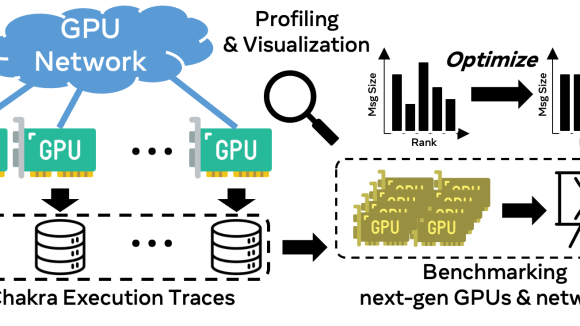

To improve asynchronous workload performance, we rebuilt the base Thrift transport on folly’s IOBuf class, a chained memory buffer with views similar to BSD’s mbuf or Linux’s sk_buff. In earlier versions of Thrift, the same memory buffer was reused for all requests, but managing that buffer quickly became tricky once we tried to send responses out of order. Instead, we now request new buffers from the memory allocator on every request. To reduce the performance impact of allocating new buffers, we allocate constant-sized buffers from jemalloc to hit the thread-local buffer cache as often as possible. Hitting the thread-local cache was an impressive performance improvement: for the average Thrift server, it’s just as fast as reusing or pooling buffers, without any of the complicated code. These buffers are then chained together to become as large as needed, and freed when no longer needed, which prevents some memory issues seen in previous Thrift servers where memory was pooled indefinitely. To support these chained buffers, all of the existing Thrift protocols had to be rewritten.
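The chaining idea can be sketched in a few lines of Python. This is a toy model, not folly's IOBuf API: the `ChainedBuffer` class, its method names, and the 4096-byte chunk size are all assumptions chosen for illustration. Data is written into chunks capped at a constant size, new chunks are linked on as the message grows, and the whole chain is dropped once the response has been sent.

```python
from typing import List

CHUNK_SIZE = 4096  # hypothetical constant chunk size, chosen only for this sketch


class ChainedBuffer:
    """Toy chain of size-capped chunks (illustrative; not folly's IOBuf)."""

    def __init__(self, chunk_size: int = CHUNK_SIZE) -> None:
        self.chunk_size = chunk_size
        self.chunks: List[bytearray] = []

    def append(self, data: bytes) -> None:
        """Copy `data` into the chain, adding chunks as each one fills up."""
        view = memoryview(data)
        while len(view) > 0:
            # Start a new chunk when there is none yet or the last one is full.
            if not self.chunks or len(self.chunks[-1]) == self.chunk_size:
                self.chunks.append(bytearray())
            tail = self.chunks[-1]
            room = self.chunk_size - len(tail)
            tail.extend(view[:room])
            view = view[room:]

    def __len__(self) -> int:
        """Total number of bytes stored across all chunks."""
        return sum(len(chunk) for chunk in self.chunks)

    def coalesce(self) -> bytes:
        """Join the chain into one contiguous bytes object."""
        return b"".join(self.chunks)

    def clear(self) -> None:
        """Drop every chunk so the memory can be reclaimed."""
        self.chunks.clear()


if __name__ == "__main__":
    buf = ChainedBuffer(chunk_size=4)
    buf.append(b"abcdefghij")
    print([bytes(chunk) for chunk in buf.chunks])
```

Because every chunk has the same maximum size, an allocator with a per-thread cache can serve these requests from a single size class, which is the effect the paragraph above attributes to jemalloc.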

Out-of-order, chained buffers

To allow for per-request attributes and features, we introduced a new THeader protocol and transport. Thrift was previously limited in the fields that could be used to add per-request information, and they were hard to access. As Thrift evolved, we wanted a way to allow service owners to add new features without making changes to the core Thrift libraries or breaking backward compatibility. For example, if a service wanted to start compressing some responses or change timeouts, this should be easy to do without having to completely change the transport used. The THeader format is very similar to HTTP headers — each request passes along headers that the server can interpret. With some clever programming, it was possible to make the THeader format backward compatible with all the previous Thrift transports and protocols.
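As a rough illustration of per-request headers with a backward-compatible fallback, consider the Python sketch below. It does not reproduce the real THeader wire format: the `MAGIC` marker, the length-prefixed layout, and the `encode`/`decode` names are assumptions made for this example. A message that starts with the marker carries key/value headers ahead of the payload; anything else is treated as a legacy, header-less message.

```python
import struct
from typing import Dict, Tuple

MAGIC = b"\xfa\xce"  # hypothetical marker; NOT the real THeader magic value


def encode(headers: Dict[str, str], payload: bytes) -> bytes:
    """Prefix `payload` with the marker and length-prefixed key/value headers."""
    parts = [MAGIC, struct.pack("!H", len(headers))]
    for key, value in headers.items():
        for text in (key, value):
            raw = text.encode("utf-8")
            parts.append(struct.pack("!H", len(raw)))
            parts.append(raw)
    parts.append(payload)
    return b"".join(parts)


def decode(message: bytes) -> Tuple[Dict[str, str], bytes]:
    """Return (headers, payload); messages without the marker pass through unchanged."""
    if not message.startswith(MAGIC):
        return {}, message  # legacy message with no headers
    offset = len(MAGIC)
    (count,) = struct.unpack_from("!H", message, offset)
    offset += 2
    fields = []
    for _ in range(count * 2):  # each header contributes a key and a value
        (size,) = struct.unpack_from("!H", message, offset)
        offset += 2
        fields.append(message[offset:offset + size].decode("utf-8"))
        offset += size
    headers = dict(zip(fields[0::2], fields[1::2]))
    return headers, message[offset:]


if __name__ == "__main__":
    wire = encode({"compression": "zlib", "timeout_ms": "250"}, b"payload")
    print(decode(wire))
    print(decode(b"legacy-bytes"))
```

A real format has to choose a marker that can never appear at the start of a valid legacy message; this sketch simply assumes that it cannot.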

Re-open-sourcing Thrift as fbthrift

Today we are releasing our evolved internal branch of Thrift (which we are calling fbthrift) on GitHub. The largest changes can be found in the new C++ code generator, available as the new target language, cpp2. The branch also includes all the header transport and protocol changes for several languages, including C++, Python, and Java. For Java users, we expect similar changes to be reflected in Swift and Nifty.

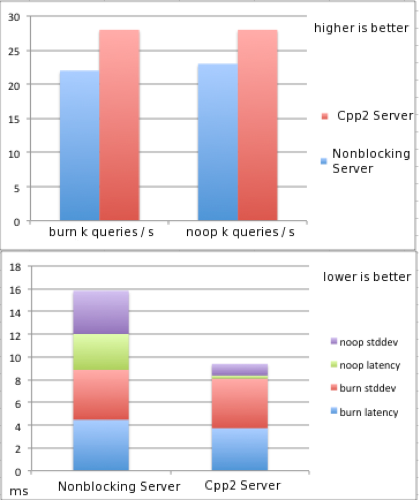

Both the new Thrift C++ generated code and THeader format are being used by a number of Thrift services at Facebook. Service requests that go between data centers are dynamically compressed based on the size of the message, while in-rack requests skip compression (and thus avoid the CPU hit). A number of services that have moved to the new cpp2 generated code have seen up to a 50% decrease in latency, and/or large decreases in memory footprint.
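The compression policy described above might look something like the following Python sketch. The 1024-byte threshold, the choice of zlib, and the `maybe_compress` function are assumptions for illustration only; the post does not say which codec or cutoff is used in production.

```python
import zlib
from typing import Tuple

MIN_COMPRESS_BYTES = 1024  # hypothetical size threshold, not a value from fbthrift


def maybe_compress(
    payload: bytes,
    crosses_datacenter: bool,
    min_size: int = MIN_COMPRESS_BYTES,
) -> Tuple[bytes, bool]:
    """Compress only large payloads that leave the data center.

    Returns the bytes to send and a flag saying whether they were compressed,
    which the sender could pass along as a per-request header.
    """
    if crosses_datacenter and len(payload) >= min_size:
        return zlib.compress(payload), True
    # Local or small messages skip compression and avoid the CPU cost.
    return payload, False


if __name__ == "__main__":
    big = b"a" * 4096
    body, compressed = maybe_compress(big, crosses_datacenter=True)
    print(len(big), len(body), compressed)
```

Keeping the decision in one small function means the routing information (here a boolean) and the threshold can change without touching the transport code.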

Additionally, the new C++ async code is a dependency for newer HHVM releases.

Latency improvements with out-of-order responses

Since Facebook open-sourced Thrift to the community in 2007, Apache Thrift has become a ubiquitous piece of software for backend and embedded systems operating at scale. Although not all Apache Thrift changes are reflected in fbthrift, we track the upstream closely and hope to work with the maintainers to incorporate this work.

Between the new C++ generated code, THeader format, and other changes available in fbthrift, we’ve gained a lot from our evolution to fbthrift. We hope that others will also benefit from our experiences running Thrift infrastructure at scale.

References

1. Thrift: Scalable Cross-Language Services Implementation

2. Folly IOBuf

3. fbthrift

4. HHVM