- Moving from equirectangular layouts to a cube format in 360 video reduced file size by 25 percent against the original.

- We’re making the source code available for the custom filter we use to transform 360 videos into the cube format.

- 360 video for VR comes with a unique set of challenges, including even bigger file sizes and streaming without waiting for buffering.

- Encoding 360 video with a pyramid geometry reduces file size by 80 percent against the original.

- View-dependent adaptive bit rate streaming allows us to optimize the experience in VR.

Video is an increasingly popular means of sharing our experiences and connecting with the things and people we care about. Both 360 video and VR create immersive environments that engender a sense of connectedness, detail, and intimacy.

Of course, all this richness creates a new and difficult set of engineering challenges. The file sizes are so large that they can be an impediment to delivering 360 video or VR at high quality and at scale. We’ve reached a couple of milestones in that effort, building on traditional mapping techniques that have been powerful tools in computer graphics, image processing, and compression. We took these well-known ideas and extended them in a couple of ways to meet the high bandwidth and quality needs of next-generation displays. First, we’ll discuss our work on our 360 video filter and its source code, which we’re making available today.

We encountered several engineering challenges while building 360 video for News Feed. Our primary goal was to tackle the drawback of the standard equirectangular layout for 360 videos, which flattens the sphere around the viewer onto a 2D surface. This layout creates warped images and contains redundant information at the top and bottom of the image — much like Antarctica is stretched into a linear landmass on a map even though it’s a circular landmass on a globe.

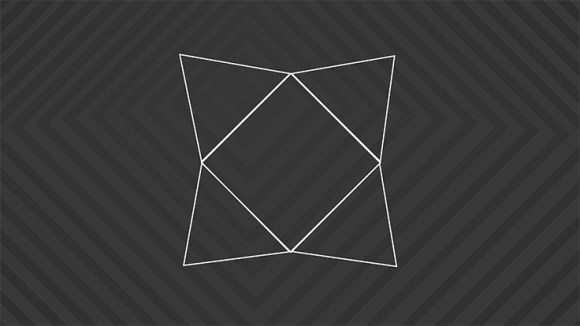

Our solution was to remap equirectangular layouts to cube maps. We did this by transforming the top 25 percent of the video to one cube face and the bottom 25 percent to another, dividing the middle 50 percent into the four remaining cube faces, and then later aligning them in two rows. This reduced file size by 25 percent against the original, which is an efficiency that matters when working at Facebook’s scale.
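One way to see why a figure near 25 percent is plausible is to count pixels. The sketch below assumes a 2:1 equirectangular frame and square cube faces whose edge is a quarter of the frame width, which is what you get when the middle 50 percent of the frame is split into four faces. It compares raw pixel counts only; actual file-size savings depend on the encoder and the content, and the function names are illustrative.

```python
def equirect_pixels(width: int) -> int:
    """Pixel count of a 2:1 equirectangular frame with the given width."""
    return width * (width // 2)


def cubemap_pixels(width: int) -> int:
    """Pixel count of six square faces, each a quarter of the equirect width."""
    face_edge = width // 4
    return 6 * face_edge * face_edge


def pixel_savings(width: int) -> float:
    """Fraction of pixels saved by the cube layout relative to equirectangular."""
    return 1 - cubemap_pixels(width) / equirect_pixels(width)


if __name__ == "__main__":
    # A 4096x2048 frame becomes six 1024x1024 faces.
    print(pixel_savings(4096))
```

Under these assumptions the cube layout holds 6/8 of the original pixels, regardless of the frame width.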

There are a few additional benefits of using cube maps for videos:

- Cube maps don’t have geometry distortion within the faces. Each face looks exactly as if you were viewing the scene head-on with a perspective camera, so straight lines stay straight within a face. This is important because video codecs model motion with straight-line motion vectors, which is why a cube map encodes better than an equirectangular layout, where motion follows curved paths.

- Cube maps’ pixels are well-distributed — each face is equally important. There are no poles as in equirectangular projection, which contains redundant information.

- Cube maps are easier to project. Each face is mapped only on the corresponding face of the cube.
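To make the projection concrete, here is a minimal sketch of the lookup a remapping step could perform: for a point on a cube face, find the matching normalized position in the equirectangular source frame. The face names, axis orientation, and function name are assumptions chosen for illustration; they are not taken from the released filter, which may use a different convention and will also need to handle pixel interpolation.

```python
import math

# Hypothetical face convention (an assumption, not the released filter's):
# u runs left-to-right and v bottom-to-top on each face, both in [-1, 1].
FACE_TO_DIRECTION = {
    "front":  lambda u, v: (u, v, 1.0),
    "back":   lambda u, v: (-u, v, -1.0),
    "right":  lambda u, v: (1.0, v, -u),
    "left":   lambda u, v: (-1.0, v, u),
    "top":    lambda u, v: (u, 1.0, -v),
    "bottom": lambda u, v: (u, -1.0, v),
}


def cube_to_equirect(face: str, u: float, v: float) -> tuple[float, float]:
    """Map a point on a cube face to normalized equirect coordinates (s, t).

    s is the horizontal position (0.5 is straight ahead) and t is the
    vertical position (0 is the top of the frame), both nominally in [0, 1].
    """
    x, y, z = FACE_TO_DIRECTION[face](u, v)
    norm = math.sqrt(x * x + y * y + z * z)
    longitude = math.atan2(x, z)       # 0 points at the front face
    latitude = math.asin(y / norm)     # +pi/2 points straight up
    s = longitude / (2 * math.pi) + 0.5
    t = 0.5 - latitude / math.pi
    return s, t


if __name__ == "__main__":
    print(cube_to_equirect("front", 0.0, 0.0))
    print(cube_to_equirect("right", 0.0, 0.0))
```

Filling each output face then amounts to running this lookup for every face pixel and sampling the source frame at the returned position.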

Cube maps have been used in computer graphics for a long time, mostly to create skyboxes and reflections, and we wanted to bring the same capability to anyone who wants to stream high-quality video. Today, we’re making the source code available on GitHub for a filter, implemented as a plug-in for ffmpeg, that transforms user-uploaded 360 video from an equirectangular layout into the cubemap format for streaming. We’re eager for people to adopt this tool, and we can’t wait to see how developers build on top of it.

Optimizing 360 video for VR

One of the main challenges of 360 video is the file size. Cube maps helped us decrease the bit rate and save storage, and do it quickly so people wouldn’t have to wait for buffering, all while maintaining the video quality and resolution. These technical hurdles are magnified when we want to play 360 video in VR. The maximum visual resolution in the GearVR, for example, is 6K — and that’s just for one eye. A 6K stereo video at 60 fps is roughly 20x larger than a full HD video, with an average bit rate of 245 Mbps. However, most mobile hardware can play only 4K video.

We can get around this by implementing view-dependent streaming. Out of the full 360-degree frame, we stream only what can be seen in the field of view (FOV), which is 96 degrees for GearVR, for example. The resulting FOV resolution is less than 4K, so it can be decoded with current mobile hardware.

While this resolves the size problem without sacrificing quality, it’s still not optimal. The hallmark of 360 video is that it’s an immersive experience in which the user controls the perspective. In VR especially, we have to account for quick or continuous head movement. If we stream only a defined viewport and the head shifts slightly, part of the new FOV won’t have an image. So we need to stream more than just the visible FOV.

Pyramid encoding

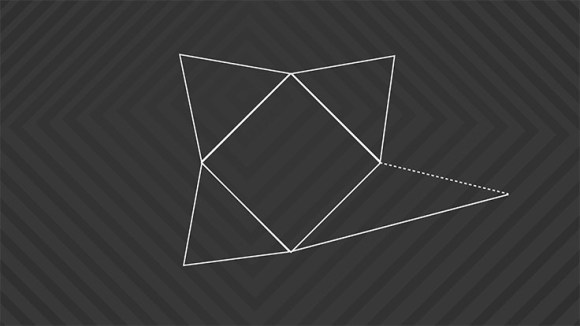

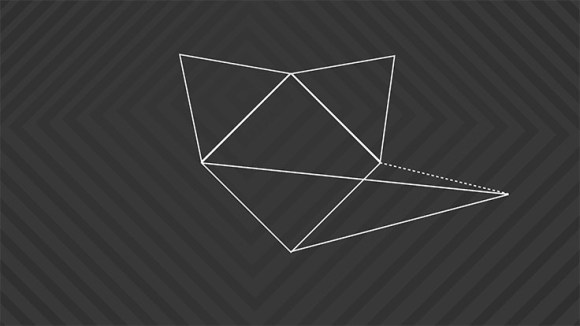

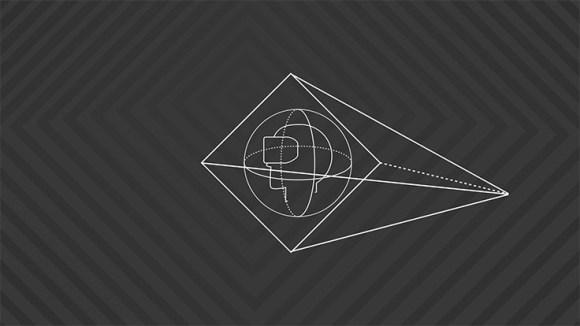

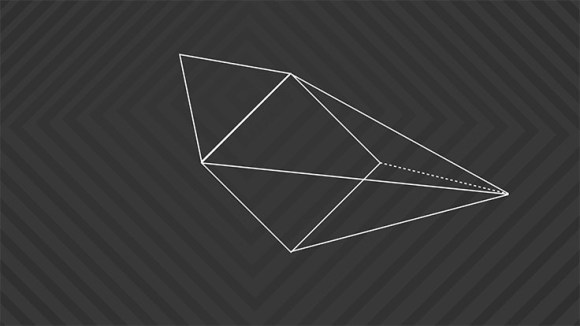

With cube maps, we put a sphere inside a cube, wrap an equirectangular image of a frame around the sphere, and then expand the sphere until it fills the cube. We built on this idea by taking a pyramid geometry and applying it to 360 video for VR.

We start by putting a sphere inside a pyramid, so that the base of the pyramid is the full-resolution FOV and the sides of the pyramid gradually decrease in quality until they reach a point directly opposite from the viewport, behind the viewer. We can unwrap the sides and stretch them slightly to fit the entire 360 image into a rectangular frame, which makes processing easier and reduces the file size by 80 percent against the original.

Unlike in cube maps, where every face is treated equally, in pyramids only the viewport is rendered in full resolution. So when the viewer shifts perspective, instead of looking at a different face of the same pyramid, he or she hops into a new pyramid whose base faces the new direction. In total, there are 30 viewports covering the sphere, separated by about 30 degrees.
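Picking which pyramid to show can be thought of as a nearest-neighbor search on the sphere. The sketch below assumes each viewport is described by the yaw and pitch of its center, in degrees, and selects the one with the smallest angular distance to the head orientation. The example list of centers is hypothetical; the actual placement of the 30 viewports is not described here.

```python
import math


def to_unit_vector(yaw_deg: float, pitch_deg: float) -> tuple[float, float, float]:
    """Convert a yaw/pitch orientation in degrees to a unit direction vector."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (
        math.cos(pitch) * math.sin(yaw),
        math.sin(pitch),
        math.cos(pitch) * math.cos(yaw),
    )


def angular_distance(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Angle in degrees between two (yaw, pitch) orientations."""
    dot = sum(p * q for p, q in zip(to_unit_vector(*a), to_unit_vector(*b)))
    # Clamp to guard against rounding just outside acos's domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def nearest_viewport(head: tuple[float, float],
                     viewports: list[tuple[float, float]]) -> int:
    """Index of the viewport whose center is closest to the head orientation."""
    return min(range(len(viewports)),
               key=lambda i: angular_distance(head, viewports[i]))


if __name__ == "__main__":
    # Hypothetical centers: a single ring on the horizon, 30 degrees apart.
    ring = [(yaw, 0) for yaw in range(-180, 180, 30)]
    print(ring[nearest_viewport((40, 5), ring)])
```

The same angular distance is also a natural quantity to log when measuring how far the viewer's gaze has drifted from the center of the current viewport.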

When a 360 video is uploaded, we transform it from an equirectangular layout to the pyramid format for each of the 30 viewports, and we create five different resolutions for each stream, for a total of 150 different versions of the same video. We chose to pre-generate the videos and store them on the server rather than encode them in real time for each client request. While this requires more storage and can affect the latency when switching between viewports, it has a simple server-side setup and doesn’t require additional software.
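As a rough illustration of what pre-generating every version implies for storage, the sketch below enumerates one key per viewport and quality level. The key format and the `build_variant_keys` helper are hypothetical; only the counts (30 viewports, five resolutions) come from the text above.

```python
from itertools import product

NUM_VIEWPORTS = 30       # viewports covering the sphere
NUM_QUALITY_LEVELS = 5   # resolutions generated per viewport


def build_variant_keys(video_id: str) -> list[str]:
    """Return one storage key per (viewport, quality) pair for a video.

    The "<id>/vpNN/qN" naming is a made-up scheme used only for illustration.
    """
    return [
        f"{video_id}/vp{viewport:02d}/q{quality}"
        for viewport, quality in product(range(NUM_VIEWPORTS),
                                         range(NUM_QUALITY_LEVELS))
    ]


if __name__ == "__main__":
    keys = build_variant_keys("demo")
    print(len(keys), keys[0], keys[-1])
```

With every variant addressable by a static key, the server only has to serve files, which matches the simple server-side setup described above.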

The viewport is constantly changing, so it doesn’t make sense to buffer entire streams from start to finish. We split each video stream into one-second GOPs and implement view-dependent adaptive bit rate streaming for playback. Every second (or less), we determine which stream to fetch next based on the viewer’s network conditions, viewport orientation, and time until the next GOP. We have to time this decision carefully; not waiting long enough could result in fetching a stream in the wrong direction or at the wrong resolution, but waiting too long would cause buffering.
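The per-chunk decision can be sketched as a small selection function. This is a simplified illustration, not our production algorithm: it assumes the viewport has already been chosen from the head orientation, takes a bandwidth estimate as input, and picks the highest bit rate that fits under a safety margin. The `Rendition` type, the bit rate ladder, and the `safety` factor are all hypothetical, and the timing of the decision relative to the next GOP is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    viewport: int        # index of the viewport this stream is centered on
    level: int           # quality level, higher means more bits
    bitrate_kbps: int    # average bit rate of the stream


def choose_next_chunk(renditions: list[Rendition], viewport: int,
                      bandwidth_kbps: float, safety: float = 0.8) -> Rendition:
    """Pick the stream to fetch for the next one-second GOP.

    Chooses the highest bit rate for the given viewport that fits within
    a fraction of the measured bandwidth, falling back to the lowest bit
    rate when nothing fits.
    """
    candidates = [r for r in renditions if r.viewport == viewport]
    if not candidates:
        raise ValueError(f"no renditions for viewport {viewport}")
    budget = bandwidth_kbps * safety
    affordable = [r for r in candidates if r.bitrate_kbps <= budget]
    if affordable:
        return max(affordable, key=lambda r: r.bitrate_kbps)
    return min(candidates, key=lambda r: r.bitrate_kbps)


if __name__ == "__main__":
    # Hypothetical five-step ladder repeated for two viewports.
    ladder = [500, 1000, 2000, 4000, 8000]
    streams = [Rendition(vp, lvl, kbps)
               for vp in range(2) for lvl, kbps in enumerate(ladder)]
    print(choose_next_chunk(streams, viewport=1, bandwidth_kbps=3000))
```

Calling this once per GOP with fresh bandwidth and orientation readings gives the basic switching behavior; a learned cost function could later replace the fixed safety margin.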

We improve our algorithm by running multiple iterations of a test in a controlled network environment, logging information such as the angular distance from the center of the viewport to the viewer’s direction, time spent in each viewport, viewport resolution and switch latency, buffering timing, and network bandwidth. For the future, we’re working on a machine-learned cost function for selecting which video chunk to fetch as well as head-orientation predictions.

Building for a new generation of immersive content will mean solving new and harder engineering challenges. We’re excited to continue making progress with 360 videos, and we look forward to sharing new tools and learnings with the engineering community.